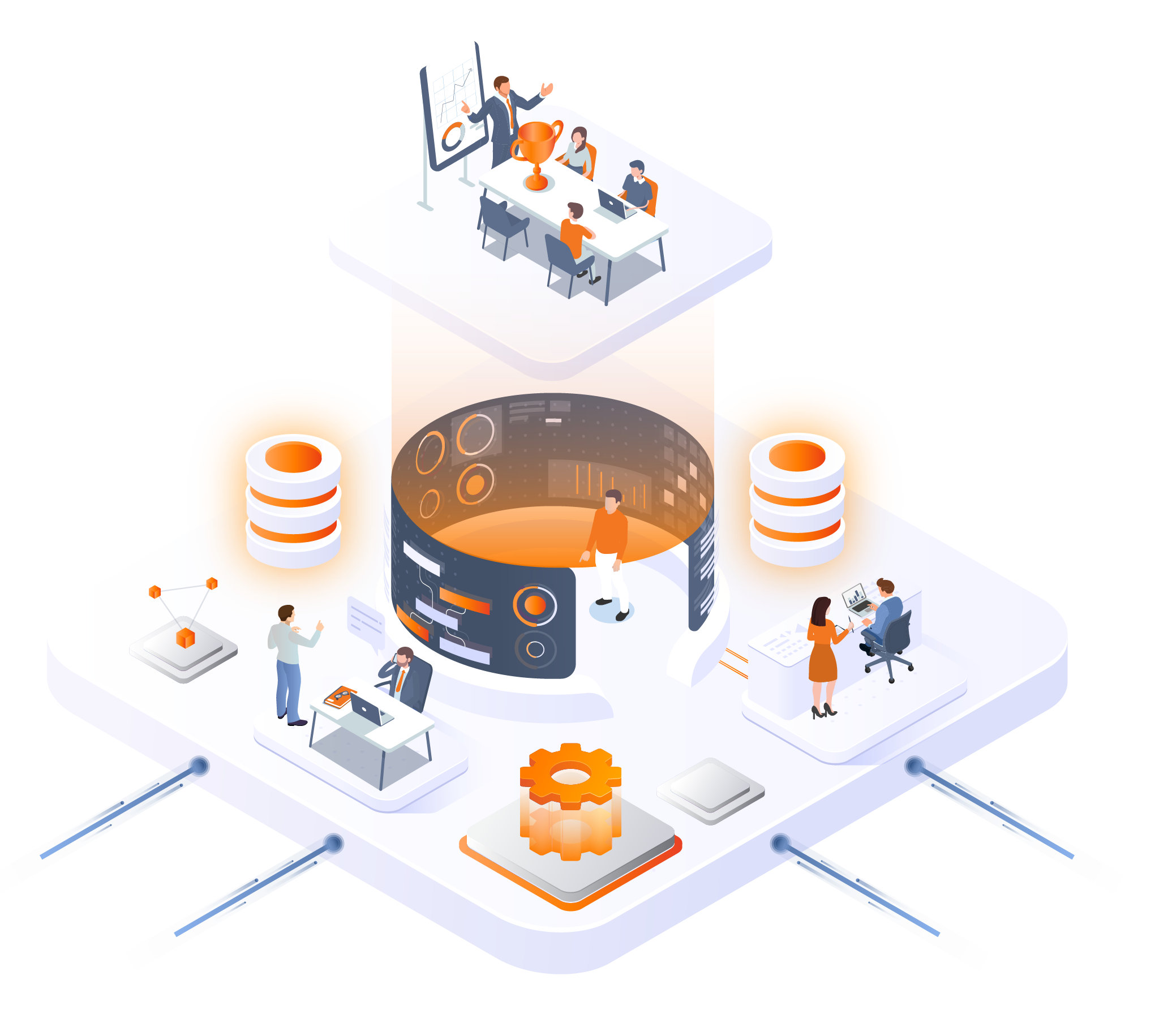

Migrating data from on-premises data warehouses to the cloud allows organizations to achieve scalability, flexibility, and performance at a lower cost.

At DataFactZ, we understand both the technical and operational challenges of cloud migration. By preparing a tailored migration roadmap, our techno-functional experts help enterprises avoid costly migration failures.

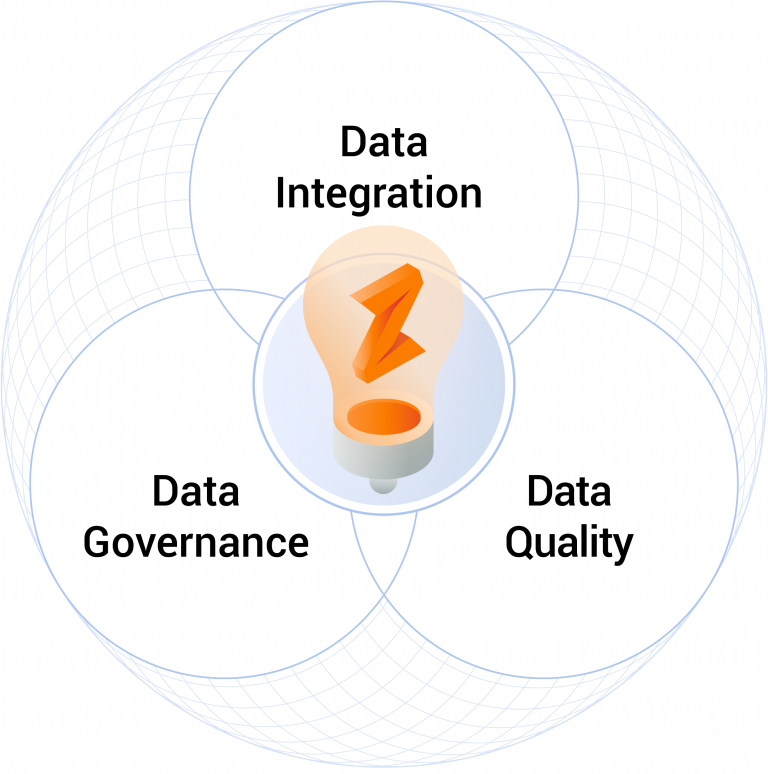

Our cloud migration approaches fall into three main categories: