Two scientists are lost at the top of a mountain.

Scientist #1 pulls out a map and looks at it for a while. Then he turns to Scientist #2 and says: “Hey, I’ve figured it out. I know where we are.”

Scientist #2: Where are we then?

Scientist #1: Do you see this mountain on the map?

Scientist #2: Yes.

Scientist #1: Well… THAT’S where we are.

Well, that is the real state of cognitive computing systems in the marketplace today. So let us first try to answer a basic question: “What is cognitive computing?”

Here are some definitions from the internet.

Definition #1: Cognitive computing systems redefine the nature of the relationship between people and their increasingly pervasive digital environment.

Confusing, isn’t it?

Definition #2: Cognitive computing is the simulation of human thought processes in a computerized model. Cognitive computing involves self-learning systems that use data mining, pattern recognition and natural language processing to mimic the way the human brain works.

Even more confusing?

Let me break down the definition into simple terms:

A system that can exhibit or simulate the behavior of a human brain is a Cognitive System. In general, this type of system falls under Artificial Intelligence. Technically, a cognitive system should also possess the following characteristics:

- Understands language and human interactions

- Generates and evaluates evidence based on a hypothesis

- Possesses self-learning capabilities

Here is a simple example.

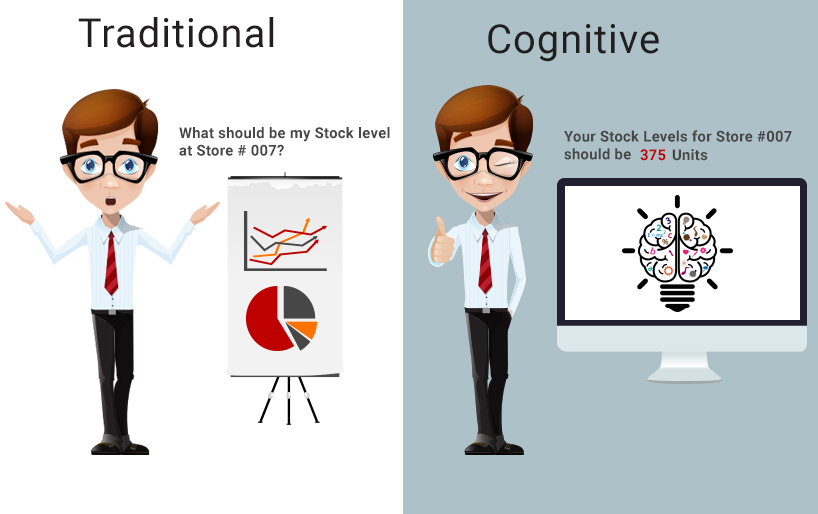

Without a Cognitive System

John, the V.P. of Merchandise Planning for a major retail enterprise, is trying to set stock levels at Store #007. He looks at a few operational reports covering past trends, sales history, and so on, and sees that this store carried 400 units last year but ran out of stock before the end of the season. The real challenge for John is to determine how many units he should carry in the upcoming season. His business experience, based on simple factors such as sales and trends, tells him to carry 450 units. But he worries that his decision could look naïve if it leaves excess stock, which would mean lost revenue. So how can John make an intelligent decision?

With a Cognitive System

In the same scenario, a cognitive system would tell John that the ideal stock level for Store #007 this season is 375 units, and it would also explain the reasoning and evidence behind this recommendation. In this case the system augments the human brain in making a decision: it exhibits the behavior of a human brain, but most importantly, it arrives at a decision by evaluating evidence based on a hypothesis.

So what could the hypothesis and evidence be in this scenario? The system correlates data from several sources, including external data such as demographics, competition, and pricing. Here it recommended 375 units because it may have detected a demographic shift or a drop in demand for certain product types (perhaps ones that generated no interest on social media or in surveys), or because it sensed a high level of competition in that particular area. You can imagine the many permutations that could come into play in this situation.

Also assume that John could converse with the system in natural language the entire time and assess the impact on revenue of changing the stock level to 380, 390, 400 units, and so on.
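The kind of what-if exploration John does can be sketched as an expected-profit (newsvendor-style) comparison of candidate stock levels. This is a minimal sketch, not a description of how any real cognitive system works: the demand scenarios, their probabilities, the price, the cost, and the clearance value are all hypothetical numbers chosen for illustration, and the names `Assumptions`, `DEMAND_SCENARIOS`, `expected_profit` and `what_if` are made up.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Assumptions:
    # All three values are hypothetical, for illustration only.
    unit_price: float = 20.0     # selling price per unit
    unit_cost: float = 12.0      # purchase cost per unit
    salvage_value: float = 5.0   # clearance value of each unsold unit


# Hypothetical demand forecast for Store #007: (units demanded, probability).
DEMAND_SCENARIOS = [(340, 0.25), (375, 0.45), (420, 0.30)]


def expected_profit(stock: int, scenarios, a: Assumptions = Assumptions()) -> float:
    """Probability-weighted profit of stocking `stock` units."""
    total = 0.0
    for demand, prob in scenarios:
        sold = min(stock, demand)  # cannot sell more than we stock
        unsold = stock - sold      # leftovers go to clearance
        profit = sold * a.unit_price + unsold * a.salvage_value - stock * a.unit_cost
        total += prob * profit
    return total


def what_if(stock_levels, scenarios):
    """Map each candidate stock level to its expected profit."""
    return {s: expected_profit(s, scenarios) for s in stock_levels}


if __name__ == "__main__":
    results = what_if([375, 380, 390, 400, 450], DEMAND_SCENARIOS)
    for stock, profit in results.items():
        print(f"{stock} units -> expected profit {profit:,.2f}")
    print("best option:", max(results, key=results.get))
```

With these made-up inputs, every unit stocked beyond 375 sells only in the highest-demand scenario (30% likely), so adding it lowers expected profit and 375 comes out ahead. Different assumptions would shift the answer, which is exactly why the evidence behind them matters.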

Conclusion:

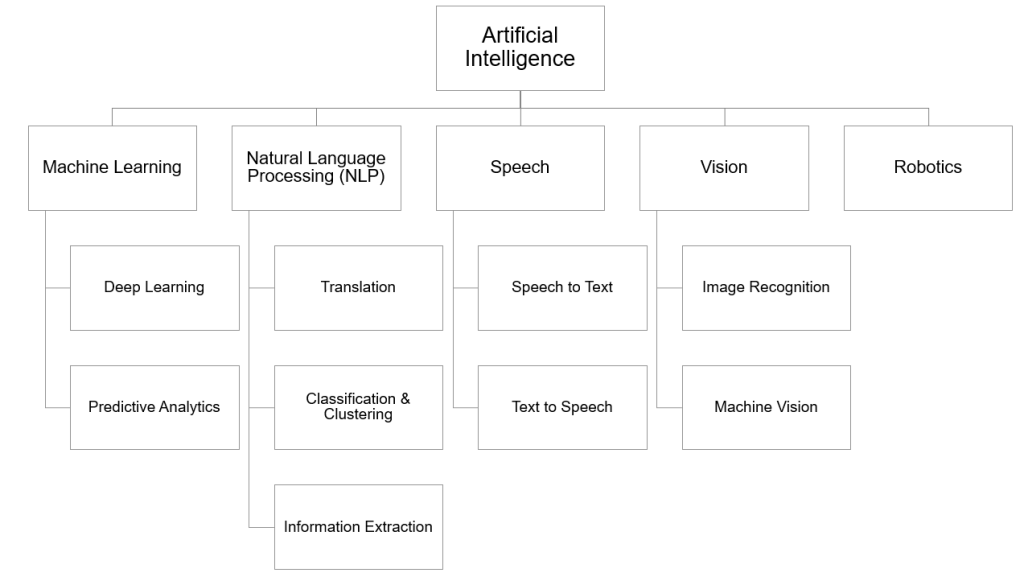

Simulating higher functions of the human brain and programming a computer to use general language are among the original goals of Artificial Intelligence (AI), which date back to 1955. AI was not practical then because we did not have systems that could scale, but today it is a different story. We directly or indirectly use bits and pieces of AI in our day-to-day lives, and especially with the IoT revolution, AI is becoming more and more real. The picture above illustrates the different areas that fall under Artificial Intelligence.

Much of the fiction we have watched in movies such as Minority Report could very well become reality, and I know that several startups in the Midwest and Silicon Valley are building cutting-edge cognitive systems. Let us wish them all the best; hopefully they will hit the ground running very soon and prove to the world that cognitive computing is not a myth.